minimagen

Imagen

- class minimagen.Imagen.Imagen(unets: Union[Unet, List[Unet], Tuple[Unet, ...]], *, text_encoder_name: str, image_sizes: Union[int, List[int], Tuple[int, ...]], text_embed_dim: Optional[int] = None, channels: int = 3, timesteps: Union[int, List[int], Tuple[int, ...]] = 1000, cond_drop_prob: float = 0.1, loss_type: Literal['l1', 'l2', 'huber'] = 'l2', lowres_sample_noise_level: float = 0.2, auto_normalize_img: bool = True, dynamic_thresholding_percentile: float = 0.9, only_train_unet_number: Optional[int] = None)

Bases: Module

Minimal Imagen implementation.

- property device: device

- forward(images, texts: Optional[List[str]] = None, text_embeds: Optional[tensor] = None, text_masks: Optional[tensor] = None, unet_number: Optional[int] = None)

Imagen forward pass. Noises the images and then calculates the loss from the U-Net's noise prediction.

- Parameters

images – Images to operate on. Shape (b, c, s, s).

texts – Text captions to condition on. List of length b.

text_embeds – Text embeddings to condition on. Used if texts is not passed in.

text_masks – Text embedding mask. Used if texts is not passed in.

unet_number – Which number unet to train if there are multiple.

- Returns

Loss.
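The loss_type constructor argument selects how the U-Net's noise prediction is compared with the noise that was actually added. The following is a pure-Python sketch, assuming 'l1', 'l2' and 'huber' mean mean-absolute error, mean-squared error and smooth-L1 (Huber with threshold 1.0) respectively; diffusion_loss is a hypothetical helper, not part of minimagen, and it works on flat lists rather than tensors.

```python
from typing import List


def diffusion_loss(pred: List[float], target: List[float], loss_type: str = "l2") -> float:
    """Mean loss between predicted and true noise (hypothetical helper)."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    diffs = [p - t for p, t in zip(pred, target)]
    if loss_type == "l1":
        # Mean absolute error
        per_elem = [abs(d) for d in diffs]
    elif loss_type == "l2":
        # Mean squared error
        per_elem = [d * d for d in diffs]
    elif loss_type == "huber":
        # Smooth L1, threshold 1.0: quadratic near zero, linear in the tails
        per_elem = [0.5 * d * d if abs(d) < 1.0 else abs(d) - 0.5 for d in diffs]
    else:
        raise ValueError(f"unknown loss_type: {loss_type!r}")
    return sum(per_elem) / len(per_elem)


pred, noise = [0.5, -2.0], [0.0, 0.0]
print(diffusion_loss(pred, noise, "l1"))     # 1.25
print(diffusion_loss(pred, noise, "l2"))     # 2.125
print(diffusion_loss(pred, noise, "huber"))  # 0.8125
```

The Huber variant is less sensitive than 'l2' to the occasional large error, since its penalty grows only linearly beyond the threshold.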

- load_state_dict(*args, **kwargs)

Overrides load_state_dict to place all Unets in the Imagen instance on the same device when called.

- sample(*args, **kwargs)

- state_dict(*args, **kwargs)

Overrides state_dict to place all Unets in the Imagen instance on the same device when called.

Unet

- class minimagen.Unet.Base(*args, **kwargs)

Bases: Unet

Base image generation U-Net with default arguments from original Imagen implementation.

dim = 512

dim_mults = (1, 2, 3, 4)

num_resnet_blocks = 3

layer_attns = (False, True, True, True)

layer_cross_attns = (False, True, True, True)

memory_efficient = False

- defaults = {'dim': 512, 'dim_mults': (1, 2, 3, 4), 'layer_attns': (False, True, True, True), 'layer_cross_attns': (False, True, True, True), 'memory_efficient': False, 'num_resnet_blocks': 3}

- class minimagen.Unet.BaseTest(*args, **kwargs)

Bases: Unet

Base image generation U-Net with default arguments intended for testing.

dim = 8

dim_mults = (1, 2)

num_resnet_blocks = 1

layer_attns = False

layer_cross_attns = False

memory_efficient = False

- defaults = {'dim': 8, 'dim_mults': (1, 2), 'layer_attns': False, 'layer_cross_attns': False, 'memory_efficient': False, 'num_resnet_blocks': 1}

- class minimagen.Unet.Super(*args, **kwargs)

Bases: Unet

Super-Resolution U-Net with default arguments from original Imagen implementation.

dim = 128

dim_mults = (1, 2, 4, 8)

num_resnet_blocks = (2, 4, 8, 8)

layer_attns = (False, False, False, True)

layer_cross_attns = (False, False, False, True)

memory_efficient = True

- defaults = {'dim': 128, 'dim_mults': (1, 2, 4, 8), 'layer_attns': (False, False, False, True), 'layer_cross_attns': (False, False, False, True), 'memory_efficient': True, 'num_resnet_blocks': (2, 4, 8, 8)}

- class minimagen.Unet.SuperTest(*args, **kwargs)

Bases: Unet

Super-Resolution U-Net with default arguments intended for testing.

dim = 8

dim_mults = (1, 2)

num_resnet_blocks = (1, 2)

layer_attns = False

layer_cross_attns = False

memory_efficient = True

- defaults = {'dim': 8, 'dim_mults': (1, 2), 'layer_attns': False, 'layer_cross_attns': False, 'memory_efficient': True, 'num_resnet_blocks': (1, 2)}
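Each preset class above accepts *args, **kwargs and fills in its defaults dictionary. The sketch below shows that pattern under the assumption that keyword arguments supplied by the caller override the preset values; ToyUnet and ToyBaseTest are hypothetical stand-ins, not minimagen classes.

```python
class ToyUnet:
    """Hypothetical stand-in for minimagen.Unet.Unet that just records its arguments."""

    def __init__(self, *, dim: int = 128, dim_mults: tuple = (1, 2, 4), **kwargs):
        self.dim = dim
        self.dim_mults = dim_mults
        self.extra = kwargs


class ToyBaseTest(ToyUnet):
    """Preset subclass: class-level defaults that each instance may override."""

    defaults = {'dim': 8, 'dim_mults': (1, 2), 'num_resnet_blocks': 1}

    def __init__(self, *args, **kwargs):
        # Assumed merge order: caller-supplied kwargs win over the preset defaults
        super().__init__(*args, **{**self.defaults, **kwargs})


u = ToyBaseTest(dim=16)
print(u.dim, u.dim_mults, u.extra)  # 16 (1, 2) {'num_resnet_blocks': 1}
```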

- class minimagen.Unet.Unet(*, dim: int = 128, dim_mults: tuple = (1, 2, 4), channels: int = 3, channels_out: Optional[int] = None, cond_dim: Optional[int] = None, text_embed_dim=512, num_resnet_blocks: Union[int, tuple] = 1, layer_attns: Union[bool, tuple] = True, layer_cross_attns: Union[bool, tuple] = True, attn_heads: int = 8, lowres_cond: bool = False, memory_efficient: bool = False, attend_at_middle: bool = False)

Bases: Module

U-Net for use as a denoising model trained via diffusion. See also minimagen.diffusion_model.GaussianDiffusion.

- forward(x: tensor, time: tensor, *, lowres_cond_img: Optional[tensor] = None, lowres_noise_times: Optional[tensor] = None, text_embeds: Optional[tensor] = None, text_mask: Optional[tensor] = None, cond_drop_prob: float = 0.0) → tensor

Unet forward pass.

- Parameters

x – Input images. Shape (b, c, s, s).

time – Timestep to noise to for each image. Shape (b,).

lowres_cond_img – (Upsampled) low-res conditioning images for super-res models. Shape (b, c, s, s).

lowres_noise_times – Time to noise to for low-res noise conditioning augmentation. Shape (b,).

text_embeds – Conditioning text embeddings. Shape (b, 256, embedding_dim). See minimagen.t5.t5_encode_text().

text_mask – Text mask for text embeddings. Shape (b, 256).

cond_drop_prob – Probability of dropping conditioning info for classifier-free guidance. Generally in the range [0.1, 0.2].

- Returns

Predicted noise for each image (the quantity the Imagen loss is computed from). Shape (b, c, s, s).
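The cond_drop_prob argument randomly discards the text conditioning so that the same network also learns an unconditional model, which classifier-free guidance needs at sampling time. The sketch below builds a per-example keep mask, assuming each batch element is dropped independently with probability cond_drop_prob; keep_mask and drop_conditioning are hypothetical helpers, and null_embed stands in for whatever "no text" embedding the model substitutes.

```python
import random
from typing import List, Optional, Sequence, TypeVar

T = TypeVar("T")


def keep_mask(batch_size: int, cond_drop_prob: float,
              rng: Optional[random.Random] = None) -> List[bool]:
    """Per-example mask: True keeps the text conditioning, False drops it."""
    if not 0.0 <= cond_drop_prob <= 1.0:
        raise ValueError("cond_drop_prob must lie in [0, 1]")
    rng = rng or random.Random()
    # rng.random() is uniform on [0, 1): each example is kept with probability 1 - cond_drop_prob
    return [rng.random() >= cond_drop_prob for _ in range(batch_size)]


def drop_conditioning(text_embeds: Sequence[T], null_embed: T, mask: Sequence[bool]) -> List[T]:
    """Replace the embeddings of dropped examples with a placeholder "null" embedding."""
    return [embed if keep else null_embed for embed, keep in zip(text_embeds, mask)]
```

With cond_drop_prob = 0.0 nothing is dropped, which is why that is the default for plain conditional inference.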

- forward_with_cond_scale(*args, cond_scale: float = 1.0, **kwargs) → tensor

Adds classifier-free guidance to the forward pass.

- Parameters

args – Arguments to pass to forward

cond_scale –

Conditioning scale.

cond_scale = 0 => unconditional model.

cond_scale = 1 => standard conditional model.

cond_scale > 1 => large guidance weights improve image quality/fidelity at the cost of diversity.


kwargs – Keyword arguments to pass to forward

- Returns

Guided noise prediction. Shape (b, c, s, s).
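The cond_scale cases above follow from combining a conditional and an unconditional prediction. A minimal sketch, assuming the usual classifier-free guidance formulation uncond + (cond - uncond) * cond_scale, with the unconditional pass obtained by setting cond_drop_prob = 1.0; guided and toy_forward are hypothetical names, and toy_forward only exists to make the arithmetic visible. Because guided uses only +, - and *, the same function works on floats or tensors.

```python
from typing import Callable


def guided(forward: Callable[..., float], *args, cond_scale: float = 1.0, **kwargs) -> float:
    # Conditional prediction: keep all text conditioning
    cond = forward(*args, cond_drop_prob=0.0, **kwargs)
    if cond_scale == 1.0:
        return cond  # standard conditional model, no second pass needed
    # Unconditional prediction: drop all text conditioning
    uncond = forward(*args, cond_drop_prob=1.0, **kwargs)
    return uncond + (cond - uncond) * cond_scale


def toy_forward(x: float, *, cond_drop_prob: float = 0.0) -> float:
    # Hypothetical model: adds 2.0 with text conditioning, 1.0 without
    return x + (1.0 if cond_drop_prob >= 1.0 else 2.0)


print(guided(toy_forward, 0.0, cond_scale=0.0))  # 1.0 (unconditional)
print(guided(toy_forward, 0.0, cond_scale=1.0))  # 2.0 (conditional)
print(guided(toy_forward, 0.0, cond_scale=3.0))  # 4.0 (pushed past conditional)
```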

Diffusion Model

- class minimagen.diffusion_model.GaussianDiffusion(*, timesteps: int)

Bases: Module

- predict_start_from_noise(x_t: tensor, t: tensor, noise: tensor) → tensor

Given a noised image and its noise component, calculates the un-noised image x_0.

- Parameters

x_t – Noised images. Shape (b, c, s, s).

t – Timestep for each image. Shape (b,).

noise – Noise component for each image. Shape (b, c, s, s).

- Returns

Un-noised images. Shape (b, c, s, s).
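Under the standard DDPM forward process $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$, recovering $x_0$ is a rearrangement: $x_0 = x_t/\sqrt{\bar{\alpha}_t} - \sqrt{1/\bar{\alpha}_t - 1}\,\epsilon$. The sketch below applies this to one flattened image, assuming $\bar{\alpha}_t$ (the cumulative product of $1-\beta_s$ up to step t) has already been looked up for that image's timestep; it is a standalone illustration, not the method's actual code.

```python
import math
from typing import List


def predict_start_from_noise(x_t: List[float], alpha_bar_t: float, noise: List[float]) -> List[float]:
    """Invert x_t = sqrt(a) * x_0 + sqrt(1 - a) * noise for x_0, where a = alpha_bar_t."""
    if not 0.0 < alpha_bar_t <= 1.0:
        raise ValueError("alpha_bar_t must lie in (0, 1]")
    sqrt_recip = 1.0 / math.sqrt(alpha_bar_t)          # 1 / sqrt(a)
    sqrt_recipm1 = math.sqrt(1.0 / alpha_bar_t - 1.0)  # sqrt(1/a - 1)
    return [sqrt_recip * xt - sqrt_recipm1 * n for xt, n in zip(x_t, noise)]
```

Noising an image with a known noise vector and passing both back in should return the original pixels up to floating-point error.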

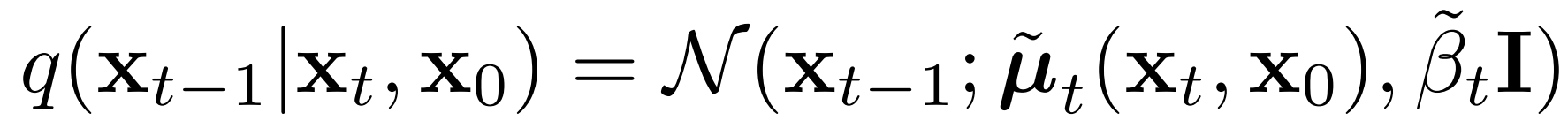

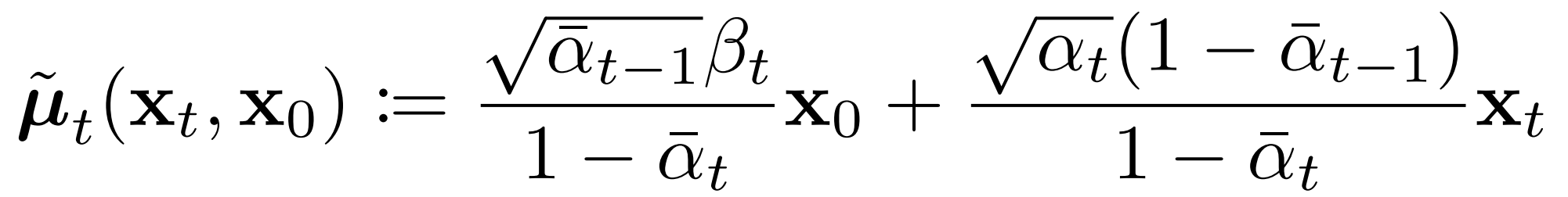

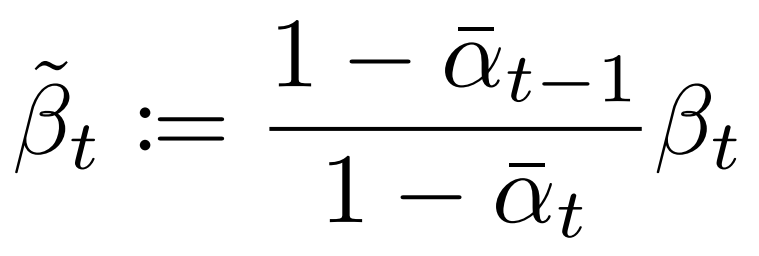

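- q_posterior(x_start: tensor, x_t: tensor, t: tensor) → tuple[torch._VariableFunctionsClass.tensor, torch._VariableFunctionsClass.tensor, torch._VariableFunctionsClass.tensor]

Calculates the parameters of the posterior $q(x_{t-1} \mid x_t, x_0) = \mathcal{N}(x_{t-1};\ \tilde{\mu}_t,\ \tilde{\beta}_t \mathbf{I})$ given a starting image x_start ($x_0$) and a noised image x_t.

Where the mean is

$$\tilde{\mu}_t = \frac{\sqrt{\bar{\alpha}_{t-1}}\,\beta_t}{1-\bar{\alpha}_t}\,x_0 + \frac{\sqrt{\alpha_t}\,(1-\bar{\alpha}_{t-1})}{1-\bar{\alpha}_t}\,x_t$$

And the variance prefactor is

$$\tilde{\beta}_t = \frac{1-\bar{\alpha}_{t-1}}{1-\bar{\alpha}_t}\,\beta_t$$

with $\alpha_t = 1 - \beta_t$ and $\bar{\alpha}_t = \prod_{s=1}^{t} \alpha_s$.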

- Parameters

x_start – Original input images x_0. Shape (b, c, h, w)

x_t – Images at current time x_t. Shape (b, c, h, w)

t – Current time. Shape (b,)

- Returns

Tuple of

posterior mean, shape (b, c, s, s),

posterior variance, shape (b, 1, 1, 1),

clipped log of the posterior variance, shape (b, 1, 1, 1)
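The three returned quantities can be computed directly from a noise schedule. The sketch below works on one flattened image with a 0-based step index t and a β schedule supplied by the caller (the schedule MinImagen itself uses is not described here); it assumes the DDPM conventions that $\bar{\alpha}_{t-1} = 1$ at the first step and that the log variance is clamped at 1e-20 before taking the log, since the variance is exactly 0 there.

```python
import math
from typing import List, Tuple


def q_posterior(x_start: List[float], x_t: List[float], t: int,
                betas: List[float]) -> Tuple[List[float], float, float]:
    """Posterior mean, variance and clipped log variance for a single image (sketch)."""
    # alpha_bar_t = prod over s <= t of (1 - beta_s)
    alphas_cumprod = []
    running = 1.0
    for beta in betas:
        running *= 1.0 - beta
        alphas_cumprod.append(running)
    ab_t = alphas_cumprod[t]
    ab_prev = alphas_cumprod[t - 1] if t > 0 else 1.0  # alpha_bar before the first step := 1
    beta_t = betas[t]
    alpha_t = 1.0 - beta_t

    # Posterior mean coefficients for x_0 and x_t
    coef_x0 = math.sqrt(ab_prev) * beta_t / (1.0 - ab_t)
    coef_xt = math.sqrt(alpha_t) * (1.0 - ab_prev) / (1.0 - ab_t)
    mean = [coef_x0 * x0 + coef_xt * xt for x0, xt in zip(x_start, x_t)]

    variance = beta_t * (1.0 - ab_prev) / (1.0 - ab_t)
    # Clamp before the log: the variance is exactly 0 at the first step
    log_variance_clipped = math.log(max(variance, 1e-20))
    return mean, variance, log_variance_clipped
```

At the first step the posterior collapses onto x_0 (mean equal to x_start, zero variance), and at later steps the posterior variance is always smaller than β_t.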

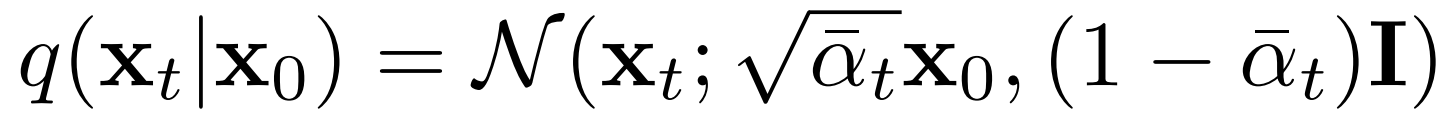

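- q_sample(x_start: tensor, t: tensor, noise: Optional[tensor] = None) → tensor

Sample from q at a given timestep:

$$q(x_t \mid x_0) = \mathcal{N}\left(x_t;\ \sqrt{\bar{\alpha}_t}\,x_0,\ (1-\bar{\alpha}_t)\,\mathbf{I}\right)$$

where $\bar{\alpha}_t$ is the cumulative product of $1-\beta_s$ up to step t.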

- Parameters

x_start – Original input images. Shape (b, c, h, w).

t – Timestep value for each image in the batch. Shape (b,).

noise – Optionally supply noise to use. Defaults to Gaussian. Shape (b, c, s, s).

- Returns

Noised image. Shape (b, c, h, w).
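Sampling from this distribution is the reparameterised form $x_t = \sqrt{\bar{\alpha}_t}\,x_0 + \sqrt{1-\bar{\alpha}_t}\,\epsilon$ with $\epsilon \sim \mathcal{N}(0, \mathbf{I})$. A pure-Python sketch for one flattened image, assuming $\bar{\alpha}_t$ has already been looked up for the image's timestep; as in the documented method, explicit noise can be passed, otherwise standard Gaussian noise is drawn.

```python
import math
import random
from typing import List, Optional


def q_sample(x_start: List[float], alpha_bar_t: float, noise: Optional[List[float]] = None,
             rng: Optional[random.Random] = None) -> List[float]:
    """Draw x_t ~ N(sqrt(a) * x_0, (1 - a) I) with a = alpha_bar_t, for one flattened image."""
    if noise is None:
        rng = rng or random.Random()
        # Default: independent standard Gaussian noise for every pixel
        noise = [rng.gauss(0.0, 1.0) for _ in x_start]
    return [math.sqrt(alpha_bar_t) * x0 + math.sqrt(1.0 - alpha_bar_t) * n
            for x0, n in zip(x_start, noise)]
```

Supplying the noise explicitly is what makes training possible: the same noise vector becomes the target the U-Net's prediction is compared against.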

T5

- minimagen.t5.get_encoded_dim(name: str) → int

Gets the encoding dimensionality of a given T5 encoder.

- minimagen.t5.t5_encode_text(text, name: str = 't5_base', max_length=256)

Encodes a sequence of text with a T5 text encoder.

- Parameters

text – List of text to encode.

name –

Name of T5 model to use. Options are:

't5_small' (~0.24 GB, 512 encoding dim)

't5_base' (~0.89 GB, 768 encoding dim)

't5_large' (~2.75 GB, 1024 encoding dim)

't5_3b' (~10.6 GB, 1024 encoding dim)

't5_11b' (~42.1 GB, 1024 encoding dim)

- Returns

Returns encodings and attention mask. Element [i,j,k] of the final encoding corresponds to the k-th encoding component of the j-th token in the i-th input list element.
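get_encoded_dim reports the width of the last axis of these encodings, which is presumably what the Unets' text_embed_dim has to agree with. A hypothetical sketch that simply tabulates the sizes listed above (the real function may instead read the value from the model configuration); encoded_dim and T5_ENCODED_DIMS are illustrative names only.

```python
# Encoding dimensionalities as listed in the t5_encode_text options above
T5_ENCODED_DIMS = {
    't5_small': 512,
    't5_base': 768,
    't5_large': 1024,
    't5_3b': 1024,
    't5_11b': 1024,
}


def encoded_dim(name: str) -> int:
    """Hypothetical stand-in for minimagen.t5.get_encoded_dim."""
    try:
        return T5_ENCODED_DIMS[name]
    except KeyError:
        raise ValueError(f"unknown T5 encoder {name!r}; options: {sorted(T5_ENCODED_DIMS)}") from None
```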

Training

- minimagen.training.ConceptualCaptions(args, smalldata=False, testset=False)

Loads the Conceptual Captions dataset.

- Parameters

args – Arguments Namespace/dictionary parsed from get_minimagen_parser().

smalldata – Whether to return a small subset of the data (for testing code).

testset – Whether to return the testing set (vs training/valid)

- Returns

test_dataset if testset else (train_dataset, valid_dataset)

- class minimagen.training.MinimagenCollator(device)

Bases: object

- class minimagen.training.MinimagenDataset(hf_dataset, *, encoder_name: str, max_length: int, side_length: int, train: bool = True, img_transform=None)

Bases: Dataset

- minimagen.training.MinimagenTrain(timestamp, args, unets, imagen, train_dataloader, valid_dataloader, training_dir, optimizer, timeout=60)

Training loop for a MinImagen instance.

- Parameters

timestamp – Timestamp for training.

args – Arguments Namespace/dict from argparsing the minimagen.training.get_minimagen_parser() parser.

unets – List of minimagen.Unet.Unet instances used in the Imagen instance.

imagen – Imagen instance to train.

train_dataloader – Dataloader for training.

valid_dataloader – Dataloader for validation.

training_dir – Training directory context manager returned from create_directory().

optimizer – Optimizer to use for training.

timeout – Amount of time to spend trying to process a batch before skipping to the next batch. Does not work on Windows.

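The Windows caveat on timeout is consistent with an alarm-signal timeout, because signal.SIGALRM and signal.alarm exist only on Unix. The sketch below assumes that approach with whole-second timeouts; the actual mechanism used by MinimagenTrain is not stated here, and batch_timeout, BatchTimeout and process_batches are hypothetical names. Note that Python only allows installing signal handlers from the main thread.

```python
import signal
import time
from contextlib import contextmanager
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")


class BatchTimeout(Exception):
    """Raised when a batch runs longer than the allowed time (hypothetical)."""


@contextmanager
def batch_timeout(seconds: int):
    """Raise BatchTimeout if the body runs longer than `seconds` (Unix only)."""
    def _handler(signum, frame):
        raise BatchTimeout(f"batch exceeded {seconds} s")

    # SIGALRM does not exist on Windows, so this approach cannot work there
    previous = signal.signal(signal.SIGALRM, _handler)
    signal.alarm(seconds)
    try:
        yield
    finally:
        signal.alarm(0)  # cancel the pending alarm, if any
        signal.signal(signal.SIGALRM, previous)


def process_batches(batches: Iterable[Callable[[], T]], seconds: int = 60) -> List[T]:
    """Run each batch callable, skipping any that exceed the timeout."""
    results: List[T] = []
    for run_batch in batches:
        try:
            with batch_timeout(seconds):
                results.append(run_batch())
        except BatchTimeout:
            continue  # pass on to the next batch
    return results


if __name__ == "__main__":
    slow = lambda: time.sleep(2) or "slow"
    print(process_batches([lambda: "a", slow, lambda: "b"], seconds=1))  # ['a', 'b']
```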

- minimagen.training.create_directory(dir_path)

Creates a training directory at the given path if it does not already exist and returns a context manager that allows the user to temporarily enter the directory (or a subdirectory), e.g. to modify files. Also creates the subdirectories “parameters”, “state_dicts”, and “tmp” under the parent directory, which can be temporarily entered in the same way by passing the subdirectory name as an argument to the returned context manager.

- Parameters

dir_path – Path of directory to create

- Returns

Context manager to access created training directory/subdirectories
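The behaviour described above can be sketched with contextlib, assuming the returned context manager changes the working directory with os.chdir and restores it on exit. make_training_dir is a hypothetical name and this is an illustration of the described layout, not the library's implementation.

```python
import os
import tempfile
from contextlib import contextmanager
from typing import Callable, ContextManager, Iterator, Optional


def make_training_dir(dir_path: str) -> Callable[..., ContextManager[str]]:
    """Hypothetical stand-in for create_directory: build the layout, return an enter() helper."""
    root = os.path.abspath(dir_path)
    for sub in ("parameters", "state_dicts", "tmp"):
        # exist_ok=True: leave existing directories untouched
        os.makedirs(os.path.join(root, sub), exist_ok=True)

    @contextmanager
    def enter(subdirectory: Optional[str] = None) -> Iterator[str]:
        previous = os.getcwd()
        target = root if subdirectory is None else os.path.join(root, subdirectory)
        os.chdir(target)
        try:
            yield target
        finally:
            # Always return to the original working directory, even on error
            os.chdir(previous)

    return enter


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        training_dir = make_training_dir(os.path.join(tmp, "run"))
        with training_dir("parameters"):
            with open("notes.txt", "w") as f:
                f.write("written inside run/parameters\n")
        print(sorted(os.listdir(os.path.join(tmp, "run"))))  # ['parameters', 'state_dicts', 'tmp']
```

Restoring the previous directory in a finally clause keeps a failed save from leaving the rest of the training script running in the wrong directory.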

- minimagen.training.get_default_args(object)

Returns a dictionary of the default arguments of a function or class
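One way to collect default arguments is the standard-library inspect module. A minimal sketch, assuming "default arguments" means every parameter that declares a default value; default_args is a hypothetical name rather than the library function.

```python
import inspect
from typing import Any, Callable, Dict


def default_args(obj: Callable[..., Any]) -> Dict[str, Any]:
    """Map each parameter of obj that has a default value to that default."""
    # For a class, inspect.signature describes its __init__ (without self)
    params = inspect.signature(obj).parameters
    return {
        name: p.default
        for name, p in params.items()
        if p.default is not inspect.Parameter.empty
    }


def example(a, b=2, *, c="x"):
    return a, b, c


print(default_args(example))  # {'b': 2, 'c': 'x'}
```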

- minimagen.training.get_minimagen_dl_opts(device)

Returns a dictionary of the default MinImagen dataloader options.

- minimagen.training.get_minimagen_parser()

Returns the argument parser for MinImagen training.

- minimagen.training.get_model_params(parameters_dir)

Returns the U-Net parameters and Imagen parameters saved in a “parameters” subdirectory of a training folder.

- Parameters

parameters_dir – “parameters” subdirectory from which to load.

- Returns

(unets_params, im_params), where unets_params is a list whose index corresponds to the Unet number in the Imagen instance.

- minimagen.training.get_model_size(imagen)

Returns the model size in MB.

- minimagen.training.load_restart_training_parameters(args, justparams=False)

When picking up from a previous training, loads the relevant command line arguments from that training so they stay identical. That is, ensures that the --MAX_NUM_WORDS, --IMG_SIDE_LEN, --T5_NAME, and --TIMESTEPS command line arguments from get_minimagen_parser() are all identical to the original training when resuming from a checkpoint.

- Parameters

args – Arguments Namespace returned from parsing get_minimagen_parser().

justparams – Whether loading from a parameters directory rather than a full training directory.
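The idea can be sketched as copying a fixed set of fields from the original run's arguments into the newly parsed ones. This assumes the original arguments are available as a dictionary (for example, read from the training directory's “parameters” subdirectory); restore_fixed_args and the dictionary format are hypothetical, while the four field names come from the description above.

```python
from argparse import Namespace
from typing import Any, Dict

# Arguments that must match the original run when resuming (from the description above)
FIXED_ON_RESTART = ("MAX_NUM_WORDS", "IMG_SIDE_LEN", "T5_NAME", "TIMESTEPS")


def restore_fixed_args(args: Namespace, saved: Dict[str, Any]) -> Namespace:
    """Overwrite the fixed arguments in args with their values from the original training."""
    for key in FIXED_ON_RESTART:
        if key in saved:
            setattr(args, key, saved[key])
    return args
```

Other arguments, such as the number of epochs, are left as given on the new command line.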

- minimagen.training.load_testing_parameters(args)

Loads command line arguments that are conducive to testing training scripts (i.e. low computational load).

In particular, the following attributes of args are changed to the specified values:

BATCH_SIZE = 2

MAX_NUM_WORDS = 32

IMG_SIDE_LEN = 128

EPOCHS = 2

T5_NAME = 't5_small'

TRAIN_VALID_FRAC = 0.5

TIMESTEPS = 25

OPTIM_LR = 0.0001

- Parameters

args – Arguments Namespace returned from parsing get_minimagen_parser().

- minimagen.training.save_training_info(args, timestamp, unets_params, imagen_params, model_size, training_dir)

Saves training info to the training directory.

- Parameters

args – Arguments Namespace/dict from argparsing the get_minimagen_parser() parser.

timestamp – Training timestamp.

unets_params – List of parameters of Unets to save.

imagen_params – Imagen parameters to save.

training_dir – Context manager returned from create_directory().


Generate

- minimagen.generate.load_minimagen(directory)

Loads a MinImagen instance from a training directory.

- Parameters

directory – MinImagen training directory as structured according to minimagen.training.create_directory().

- Returns

MinImagen instance (ready for inference).

- minimagen.generate.load_params(directory)

Loads Unets and Imagen parameters from a training directory

- Parameters

directory – Path of training directory generated by training

- Returns

(unets_params, imagen_params), where unets_params is a list whose i-th element contains the parameters of the i-th Unet in the Imagen instance.

- minimagen.generate.sample_and_save(captions: list, *, minimagen: Optional[Imagen] = None, training_directory: Optional[str] = None, sample_args: dict = {}, save_directory: Optional[str] = None, filetype: str = 'png')

Generates and saves images for a list of captions using a MinImagen instance. Images are saved into a “generated_images” directory as “image_<CAPTION_INDEX>.<FILETYPE>”.

- Parameters

captions – List of captions (strings) to generate images for.

minimagen – MinImagen instance to use for sampling (i.e. generating images). Must specify one of minimagen or training_directory.

training_directory – Training directory of MinImagen instance to use for inference. Must specify one of minimagen or training_directory.

sample_args – Additional keyword arguments to pass to the Imagen.sample function. Do not include texts or return_pil_images in this dictionary.

save_directory – Directory to save images to. Defaults to a datetime-stamped directory if not specified.

filetype – Filetype of saved images.
